Live data application

Introduction

We are going to look at our platform from the point of view of the data, starting with how our design choices influence the data that is created. Then we will look at how this data changes when we interact with the application. Lastly, we will look at how to access this data through the various means our platform provides.

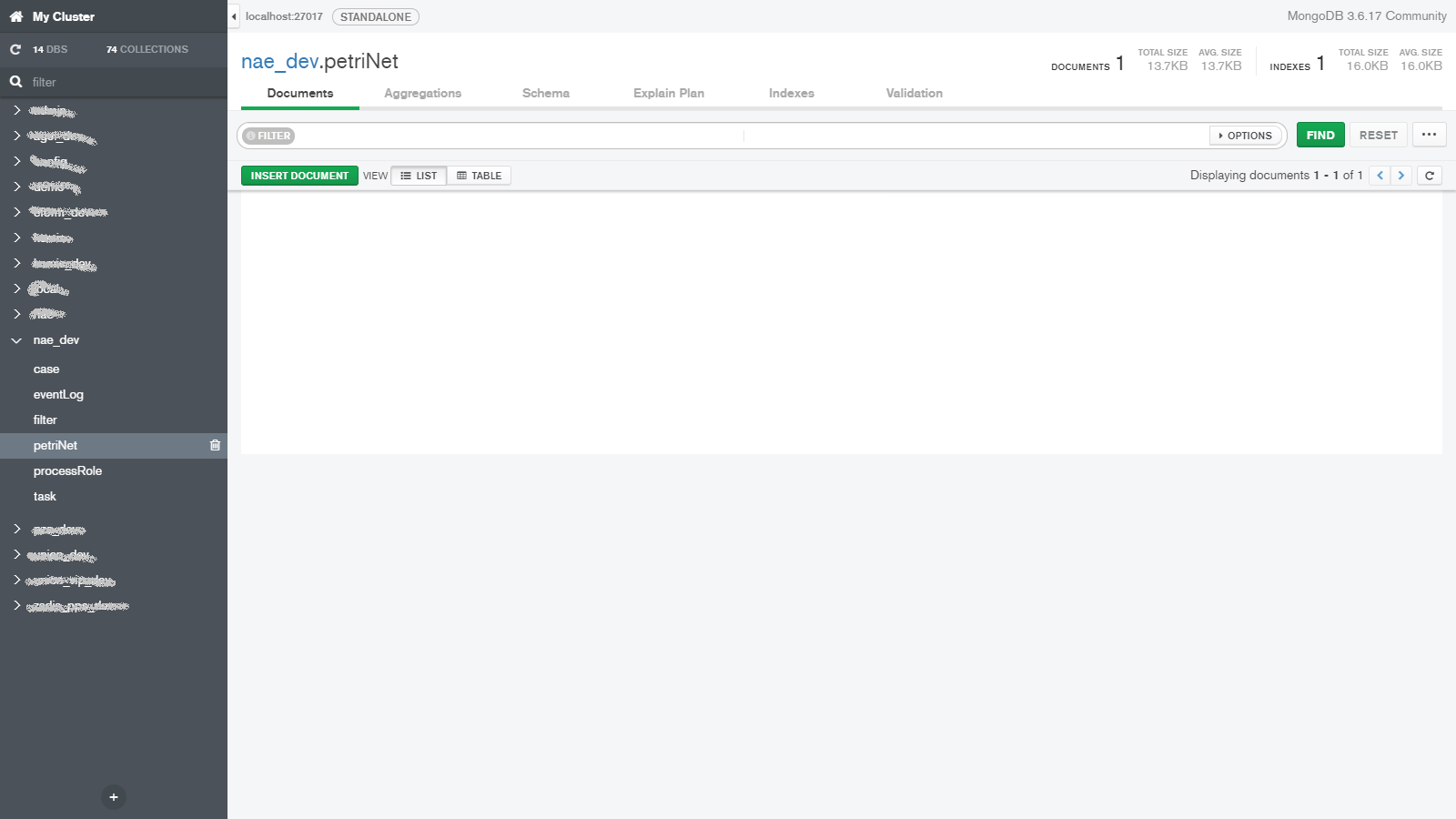

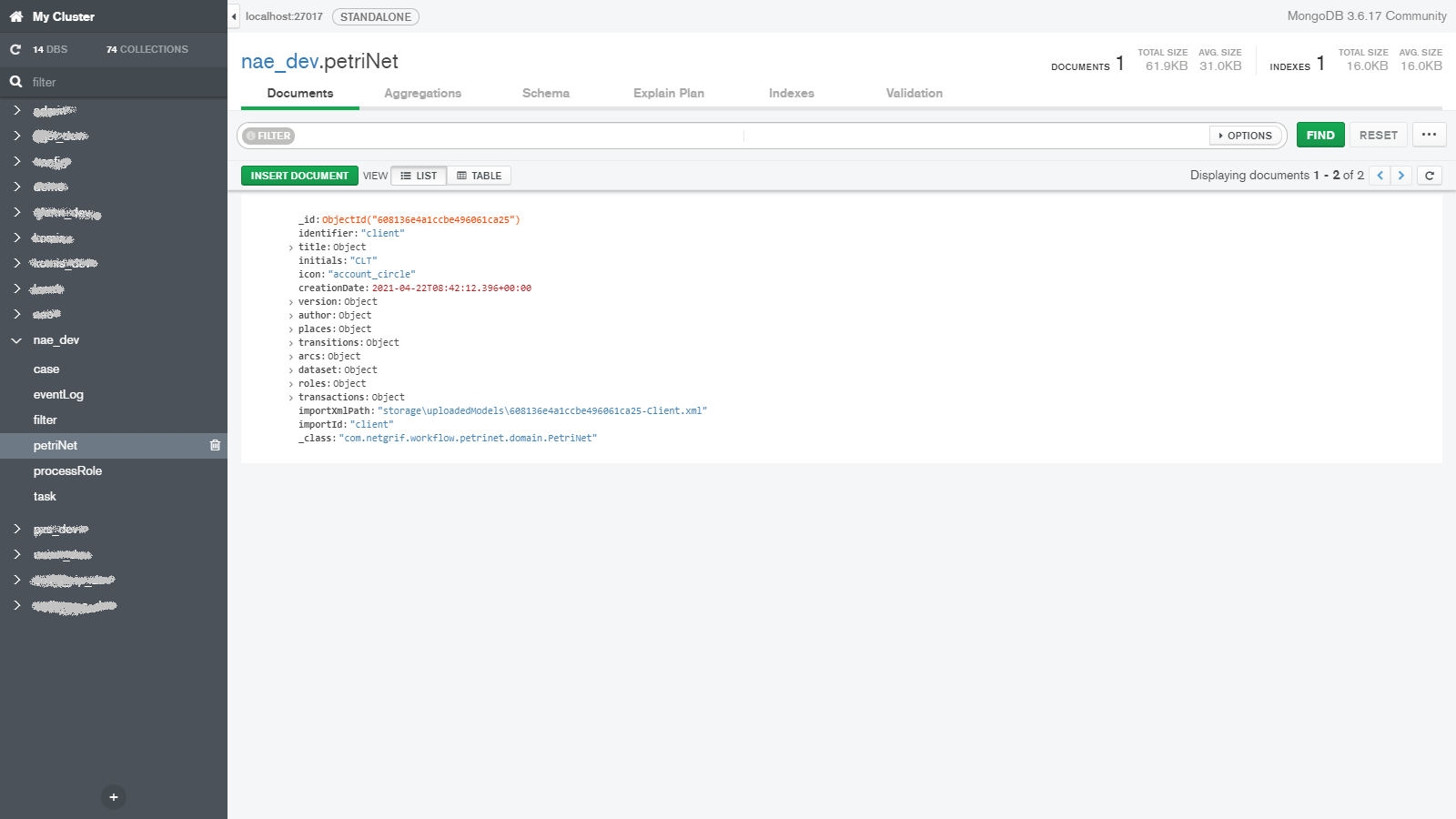

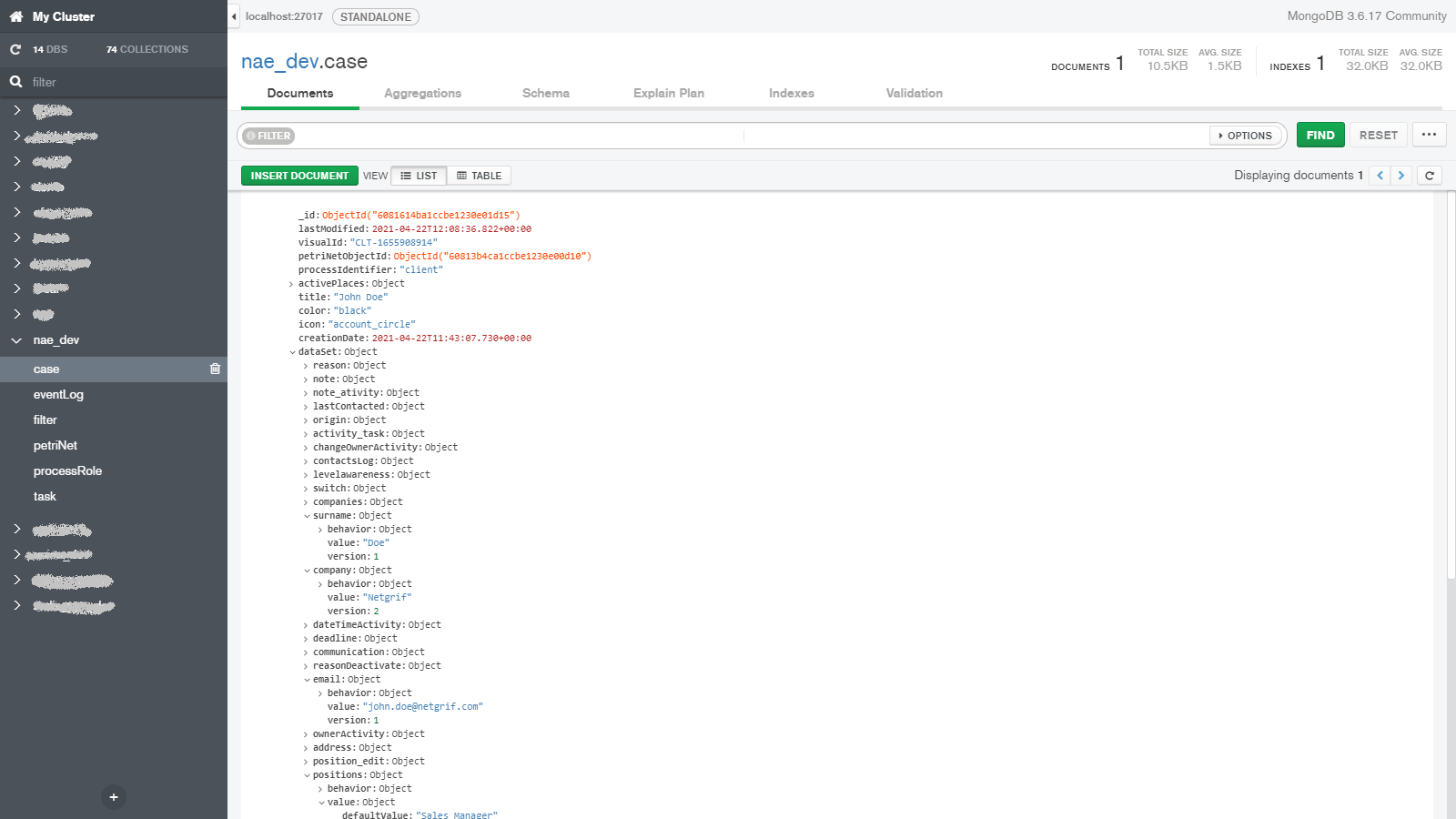

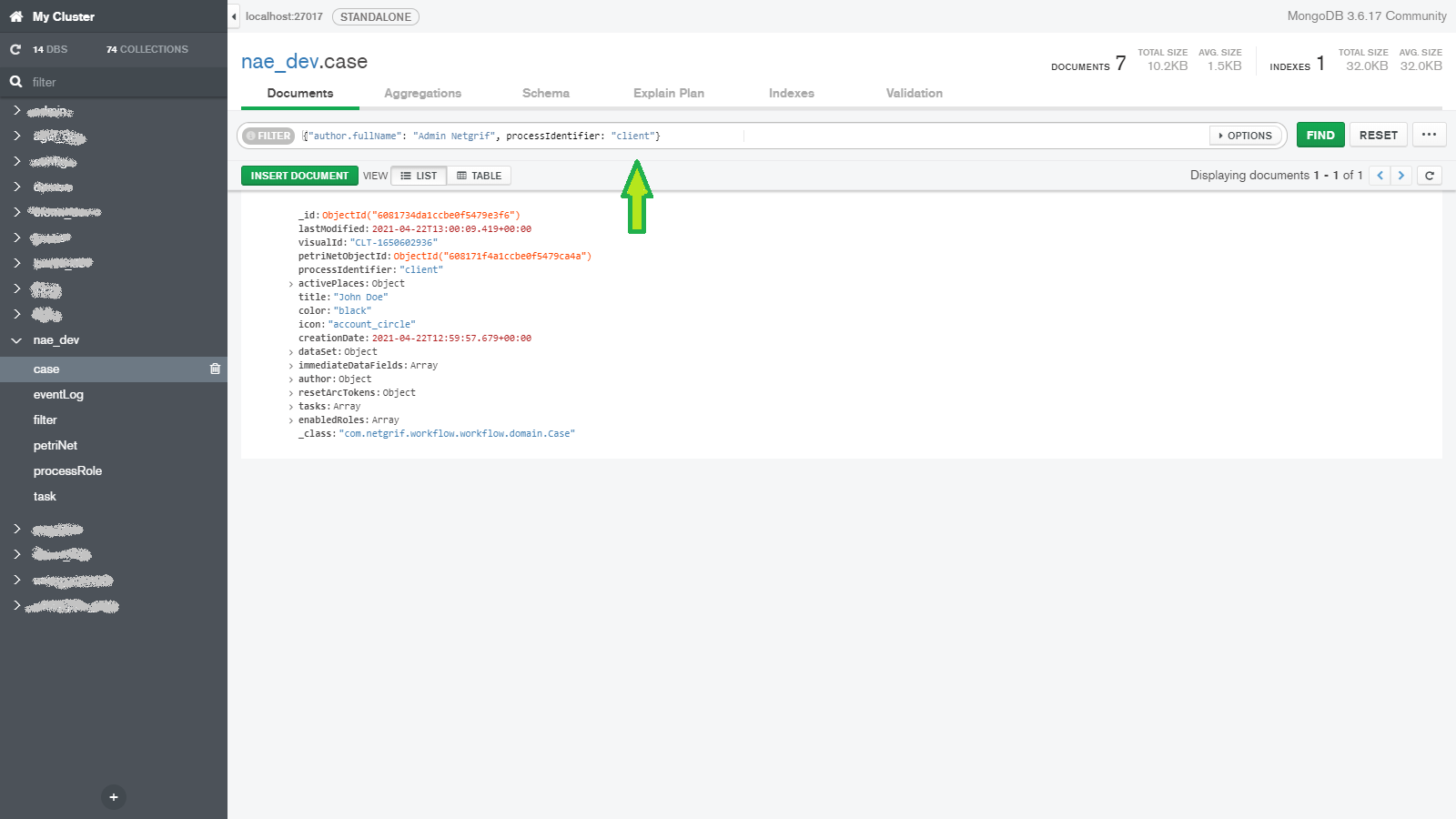

When we talk about data, we have to at least mention how it is stored, and since this blog aims to provide some technical insight, we have to talk a little about databases. To keep things brief, we will only mention the two most important databases used by our platform. We use MongoDB to store virtually all the important data.

In MongoDB we store the three most important entities of our platform, alongside other data such as application logs (read more in our log blog). Each of these three entities (listed in no particular order) is stored in its own MongoDB collection:

- Processes – the process models themselves

- Cases – the individual instances of the processes

- Tasks – the currently executable operations available in the cases

If none of these ring a bell, we recommend reading our blog that explains the fundamentals of our platform first and then returning to this one to get the most out of it. The second database that deserves a mention is Elasticsearch, which we use to store indexed information about the data in MongoDB, so that searching is faster and the platform performs better.
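To make the relationships between the three collections more concrete, here is a minimal sketch in Python in which each list stands in for one MongoDB collection. The field names (`processId`, `caseId`, `dataSet`, `transitionId`) and the example values are our own illustrative assumptions, not the platform's actual MongoDB schema.

```python
# Each list stands in for one MongoDB collection.
# Field names are illustrative assumptions, not the platform's real schema.
processes = [
    {"_id": "p1", "identifier": "insurance_claim", "dataVariables": ["client_name", "amount"]},
]

cases = [
    # Every case points back to the process it was created from.
    {"_id": "c1", "processId": "p1", "dataSet": {"client_name": "Alice", "amount": 100}},
    {"_id": "c2", "processId": "p1", "dataSet": {"client_name": "Bob", "amount": 250}},
]

tasks = [
    # Every task points to its case and to the transition it represents.
    {"_id": "t1", "caseId": "c1", "transitionId": "fill_in"},
    {"_id": "t2", "caseId": "c2", "transitionId": "approve"},
]


def cases_of_process(process_id):
    """All cases (instances) created from one process model."""
    return [case for case in cases if case["processId"] == process_id]


def tasks_of_case(case_id):
    """The currently executable tasks of one case."""
    return [task for task in tasks if task["caseId"] == case_id]
```

Keeping the three entities in separate collections and linking them by identifiers means a case can be looked up without loading its process, and the tasks of a case can be queried on their own.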

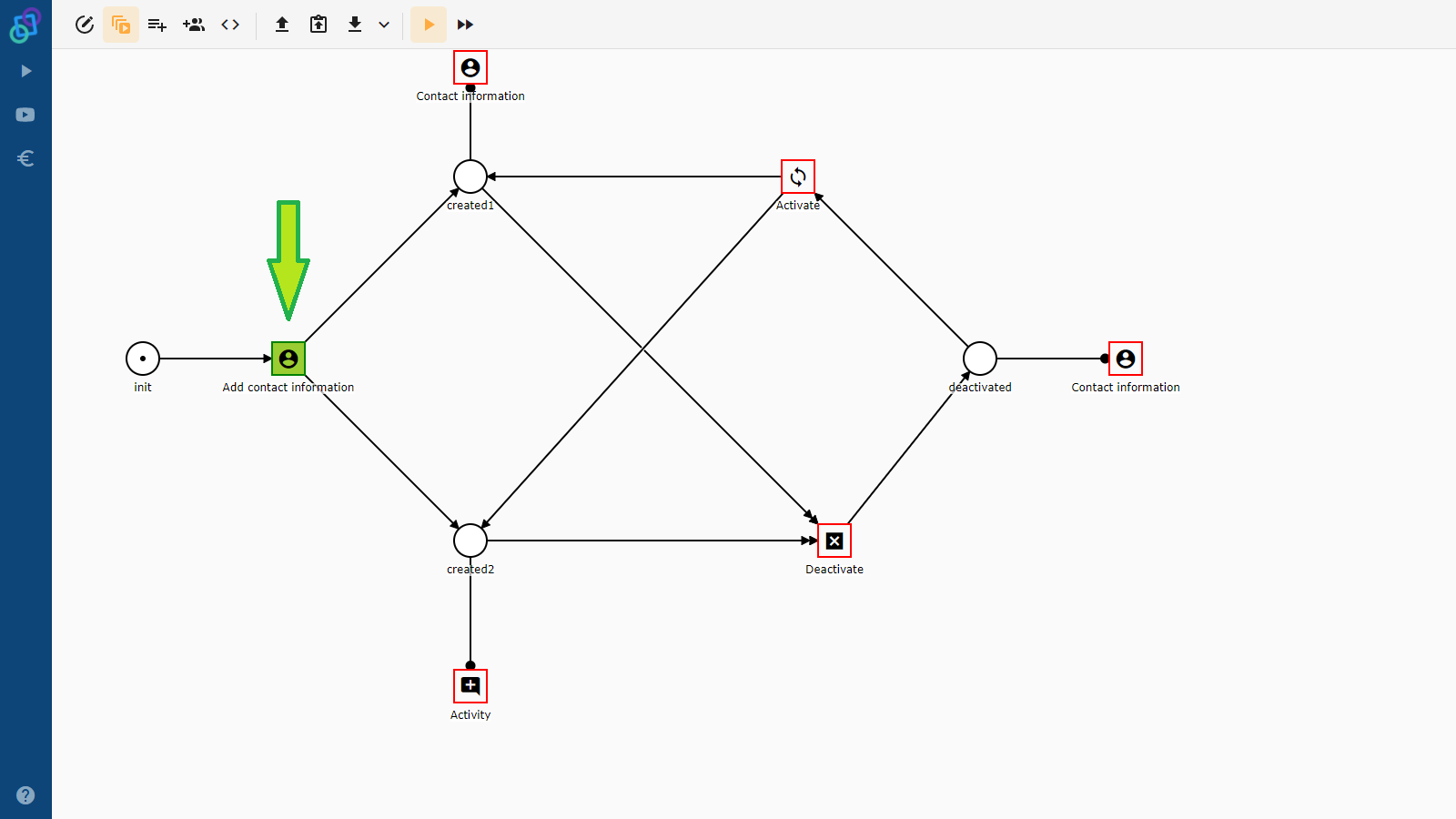

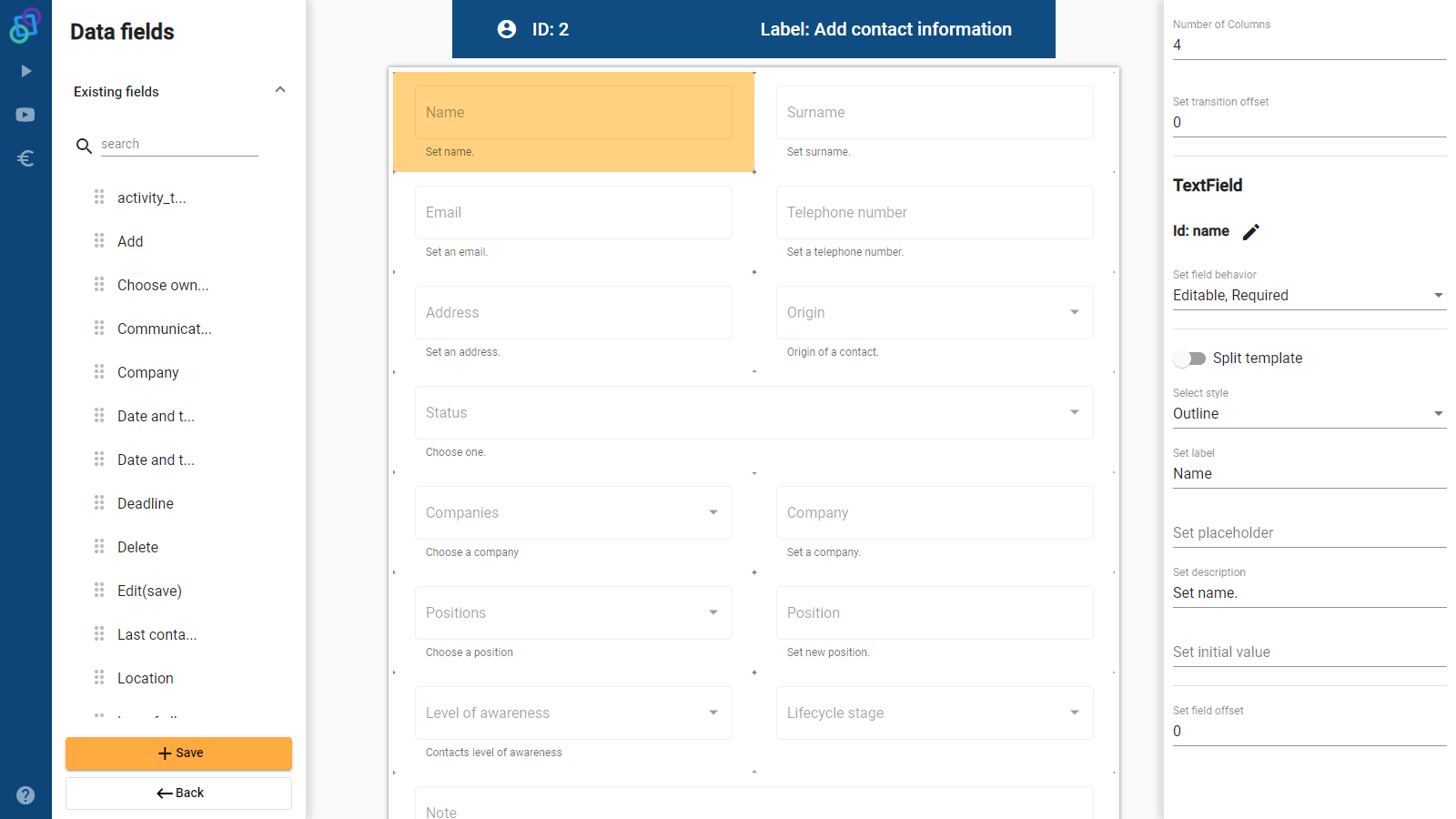

Empty database

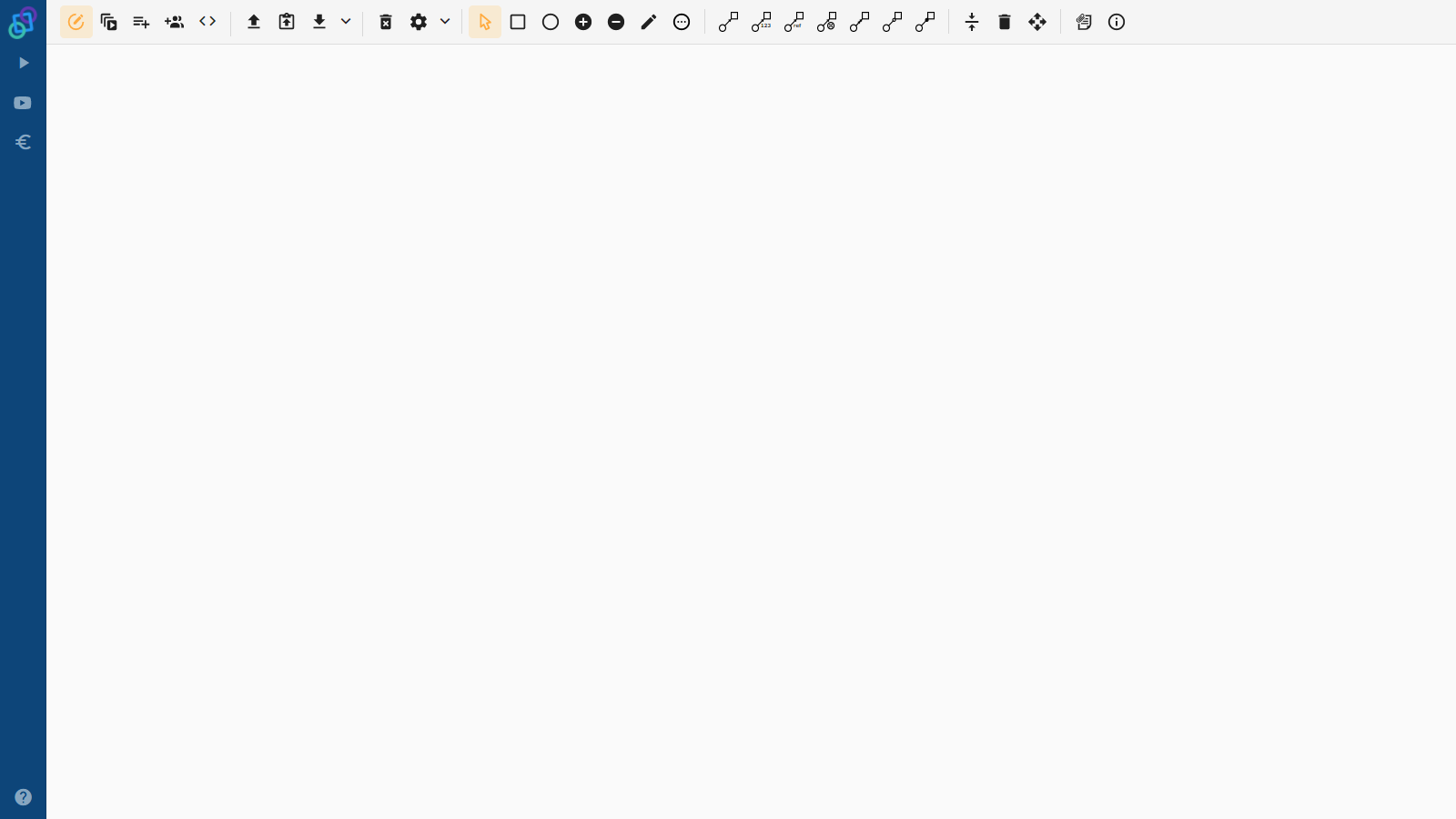

Let’s take a look at how our design decisions influence the data created, processed and stored by our platform. When we start working on a new Petriflow-based application, the databases are virtually empty. Our journey begins in our process builder, where we model business processes using Petri nets and other aspects of the Petriflow language. Among these aspects are data variables and data references. If processes are analogous to classes, then data variables are analogous to class attributes. As such, they dictate what kinds of data each instance (case) can store for itself.
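The class analogy can be sketched in Python as follows. `DataVariable`, `ProcessModel` and `new_instance` are hypothetical names used only for illustration; a real Petriflow model is an XML document with many more properties. The sketch shows a process model declaring data variables (with optional initial values) and, next to it, a plain class whose attributes play the same role.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Tuple


@dataclass(frozen=True)
class DataVariable:
    """Declaration of one data variable in a process model (hypothetical structure)."""
    id: str
    type: str                   # e.g. "text" or "number"
    init: Optional[Any] = None  # initial value, if the model declares one


@dataclass(frozen=True)
class ProcessModel:
    """A process model: like a class, it declares which data each instance may hold."""
    identifier: str
    data: Tuple[DataVariable, ...]

    def new_instance(self) -> Dict[str, Any]:
        """Values of a fresh case: one slot per declared variable."""
        return {var.id: var.init for var in self.data}


# The same idea expressed as a plain class: attributes play the role of data variables.
class InsuranceClaim:
    def __init__(self) -> None:
        self.client_name: Optional[str] = None
        self.amount: float = 0


claim_process = ProcessModel("insurance_claim", (
    DataVariable("client_name", "text"),
    DataVariable("amount", "number", 0),
))
```

A case created from `claim_process` can hold exactly the values of `client_name` and `amount`, just as every `InsuranceClaim` object has exactly those two attributes.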

Process creation

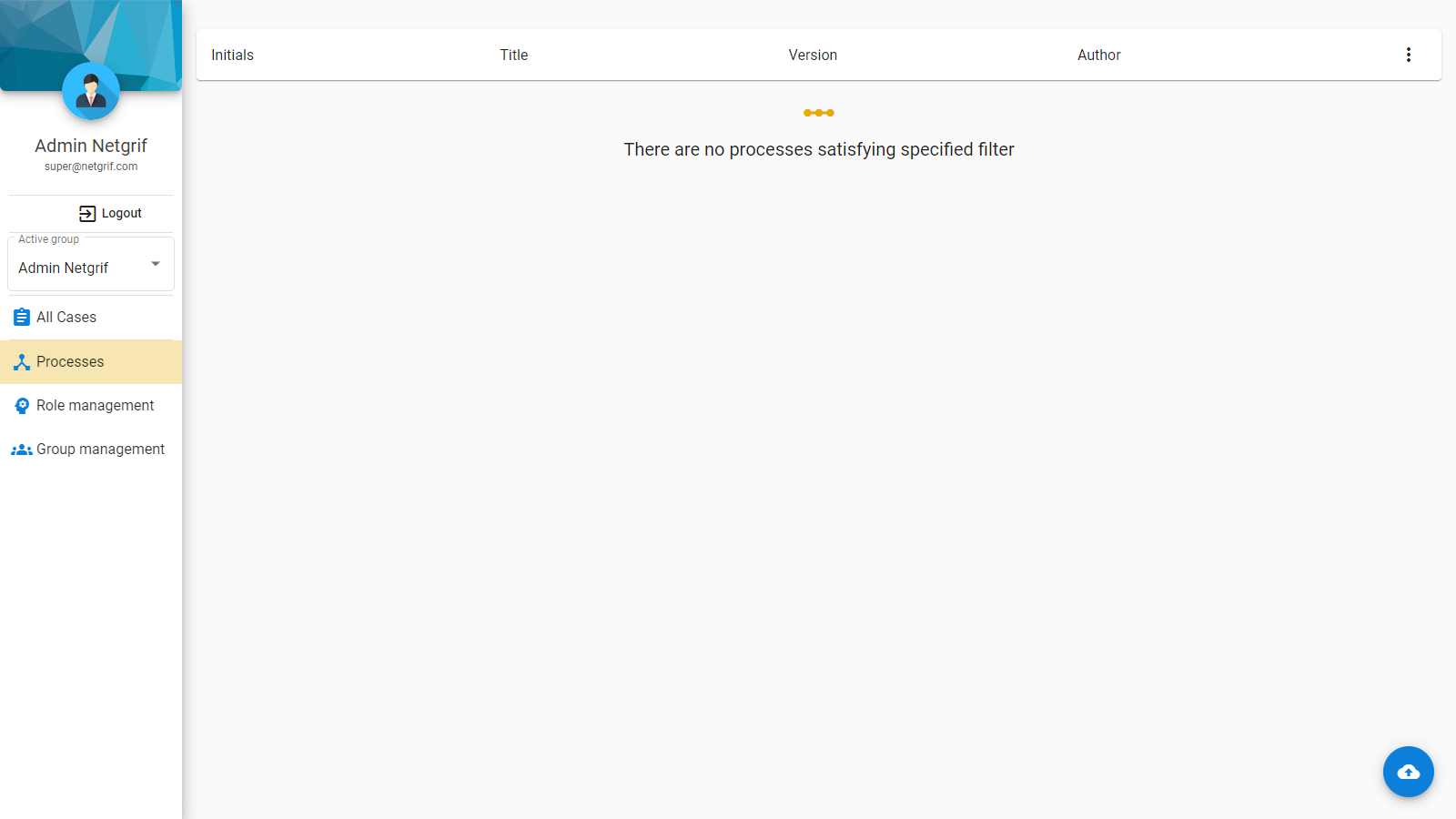

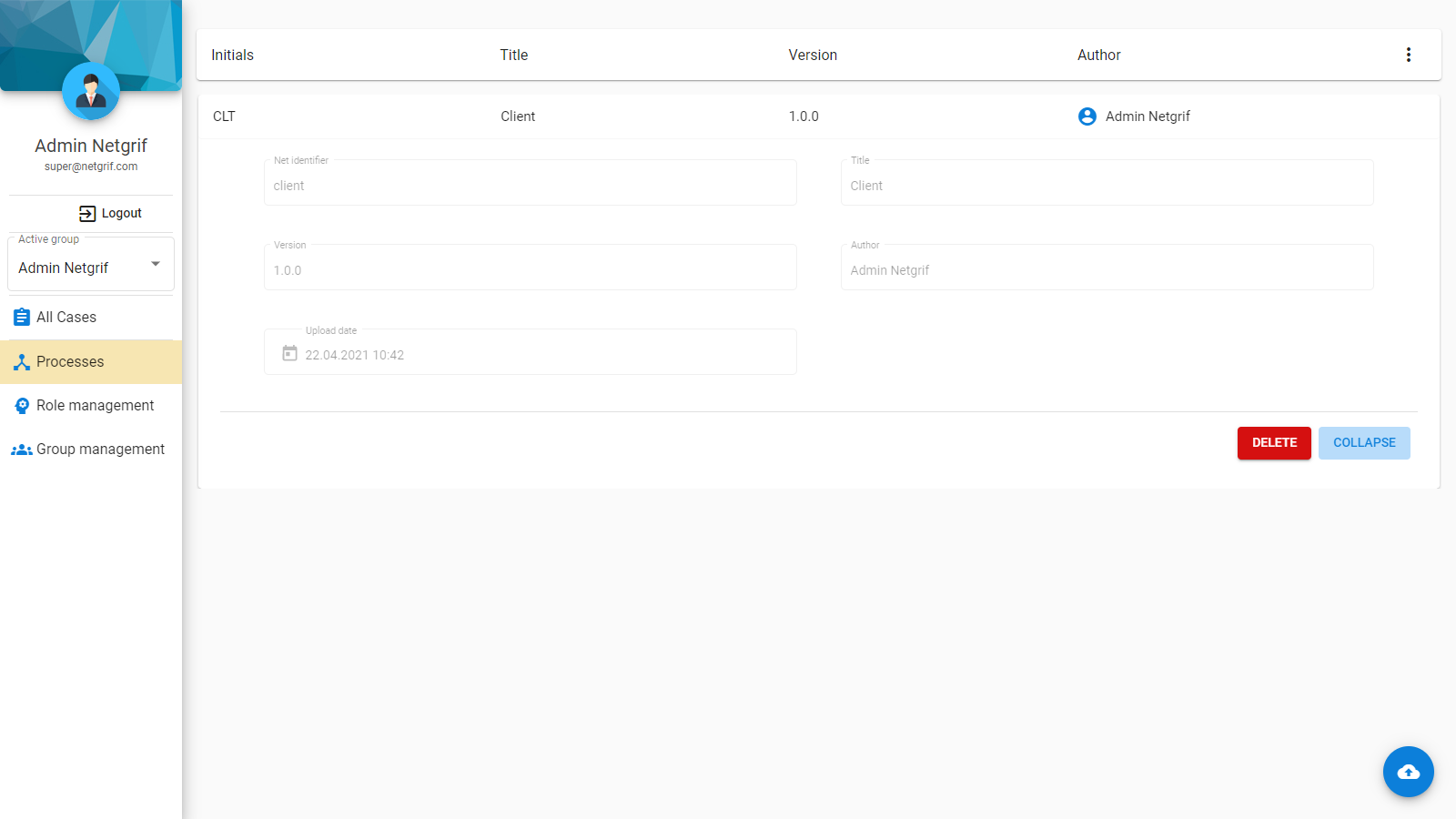

Once we have a process model prepared, we can upload it to our platform. Its representation is then stored in MongoDB, so that we can use it as a template to create instances of it – the cases. Since the process defines which data variables the individual instances have, we can say it is analogous to a table in a traditional relational database.
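The following Python sketch illustrates both ideas from the paragraph above: an uploaded model is stored so it can later be loaded as a template, and its data variables map onto the columns of a table. `ProcessRepository`, `as_create_table` and the type mapping are hypothetical; this is purely an analogy, since the platform stores process models in MongoDB and does not generate SQL.

```python
from typing import Dict

# Analogy only: the platform stores process models in MongoDB and does not generate SQL.
# The type mapping and all names below are illustrative assumptions.
SQL_TYPES = {"text": "TEXT", "number": "NUMERIC", "boolean": "BOOLEAN"}


class ProcessRepository:
    """In-memory stand-in for the collection of uploaded process models."""

    def __init__(self) -> None:
        self._models: Dict[str, Dict[str, str]] = {}

    def upload(self, identifier: str, data_variables: Dict[str, str]) -> None:
        """Store a process model (variable id -> type) so it can serve as a template."""
        self._models[identifier] = dict(data_variables)

    def template(self, identifier: str) -> Dict[str, str]:
        """Load the stored model, e.g. when a new case is about to be created."""
        return dict(self._models[identifier])


def as_create_table(identifier: str, data_variables: Dict[str, str]) -> str:
    """Express the process-as-table analogy: every data variable becomes a column."""
    columns = ", ".join(f"{var} {SQL_TYPES[kind]}" for var, kind in data_variables.items())
    return f"CREATE TABLE {identifier} ({columns})"


repository = ProcessRepository()
repository.upload("insurance_claim", {"client_name": "text", "amount": "number"})
print(as_create_table("insurance_claim", repository.template("insurance_claim")))
# CREATE TABLE insurance_claim (client_name TEXT, amount NUMERIC)
```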

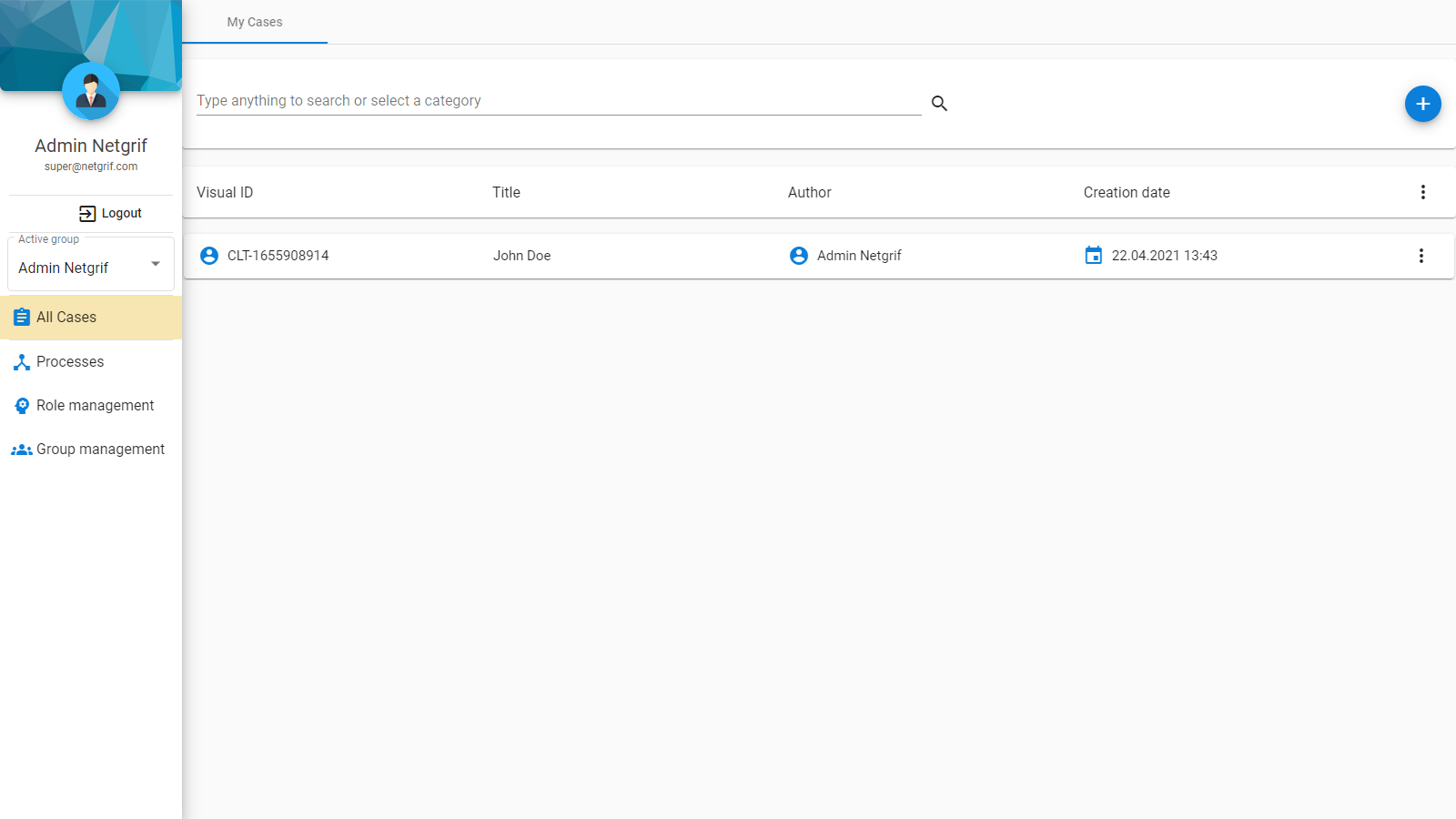

When the application contains at least one process, we can start creating the individual cases.

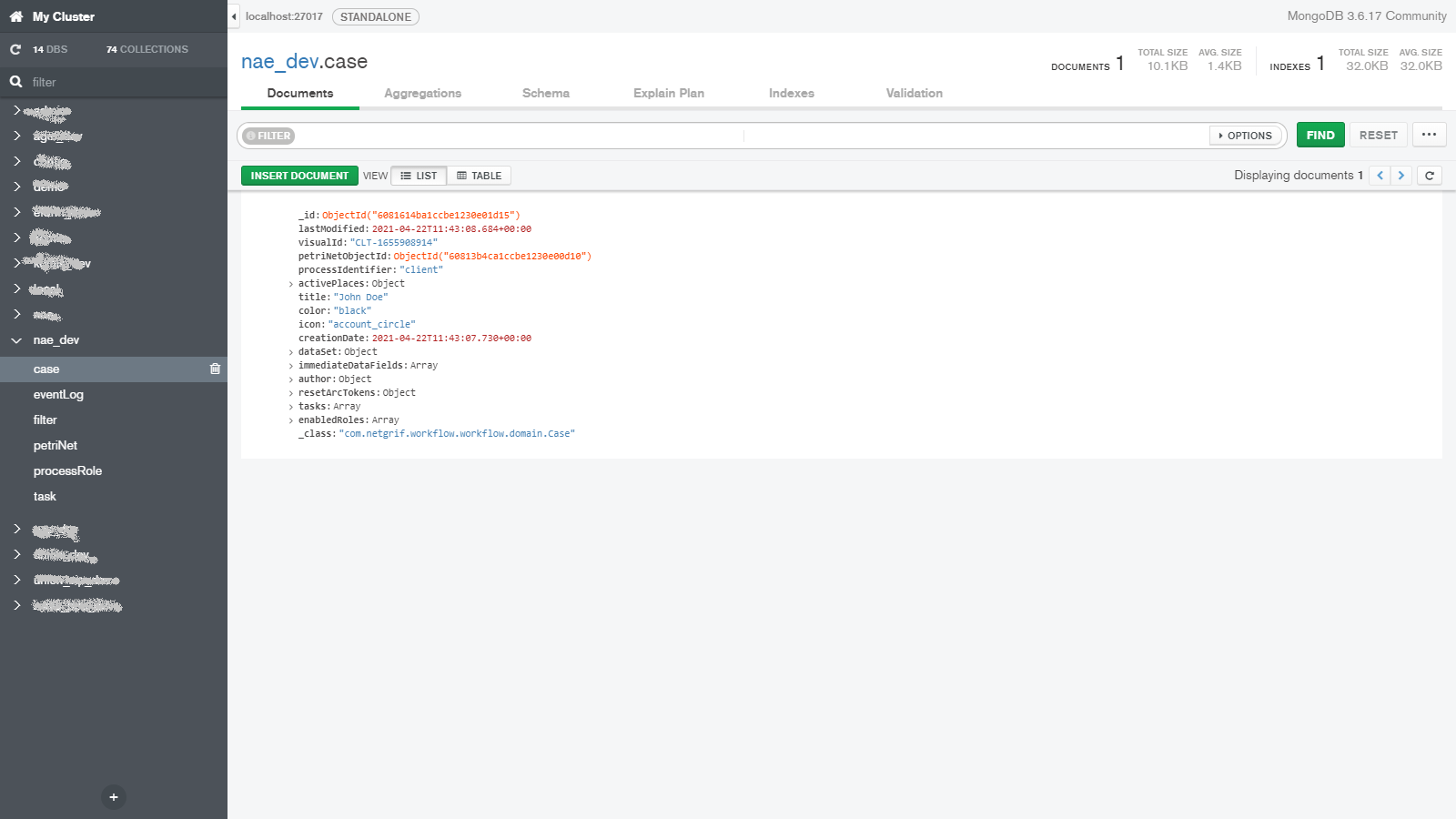

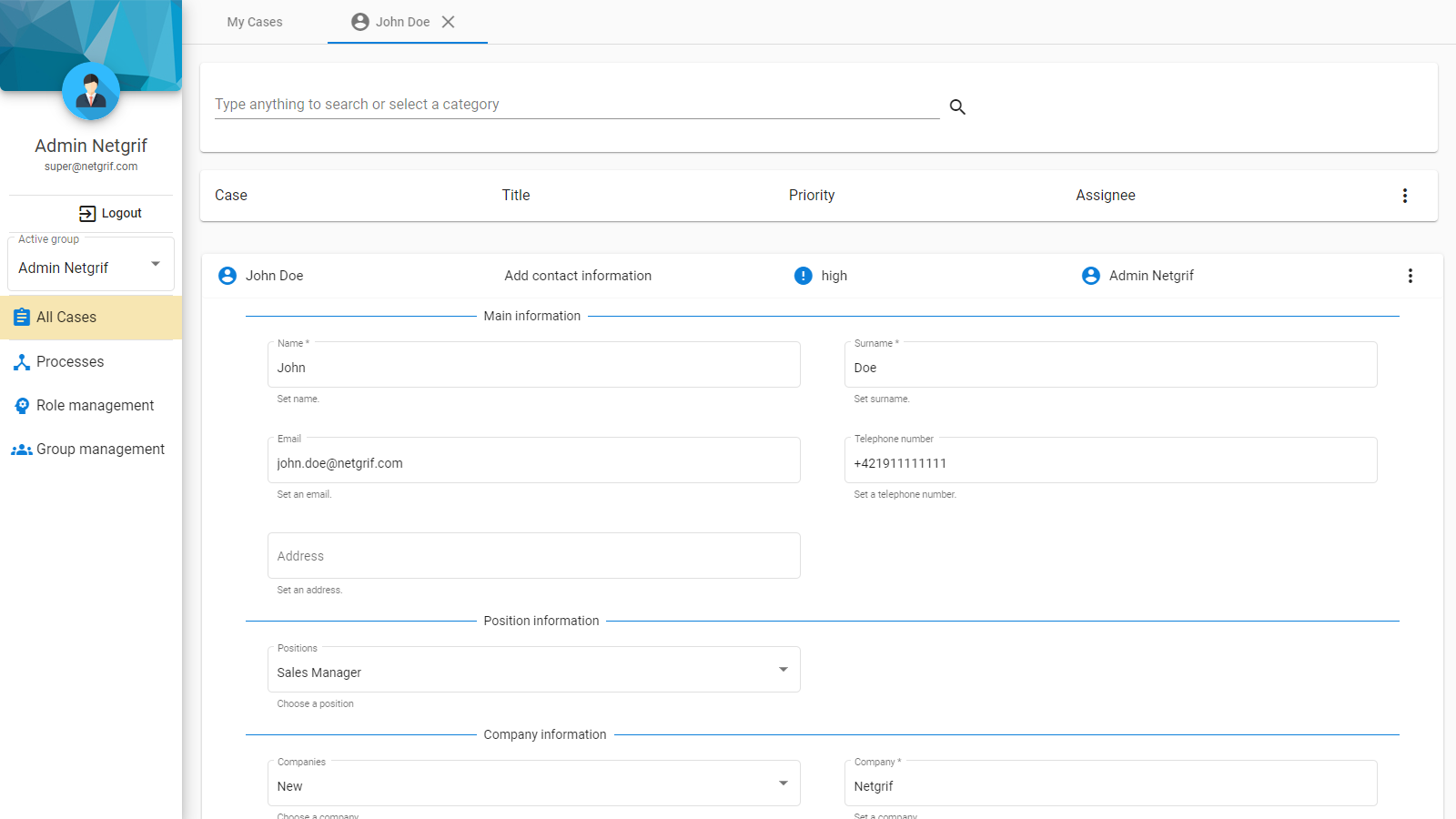

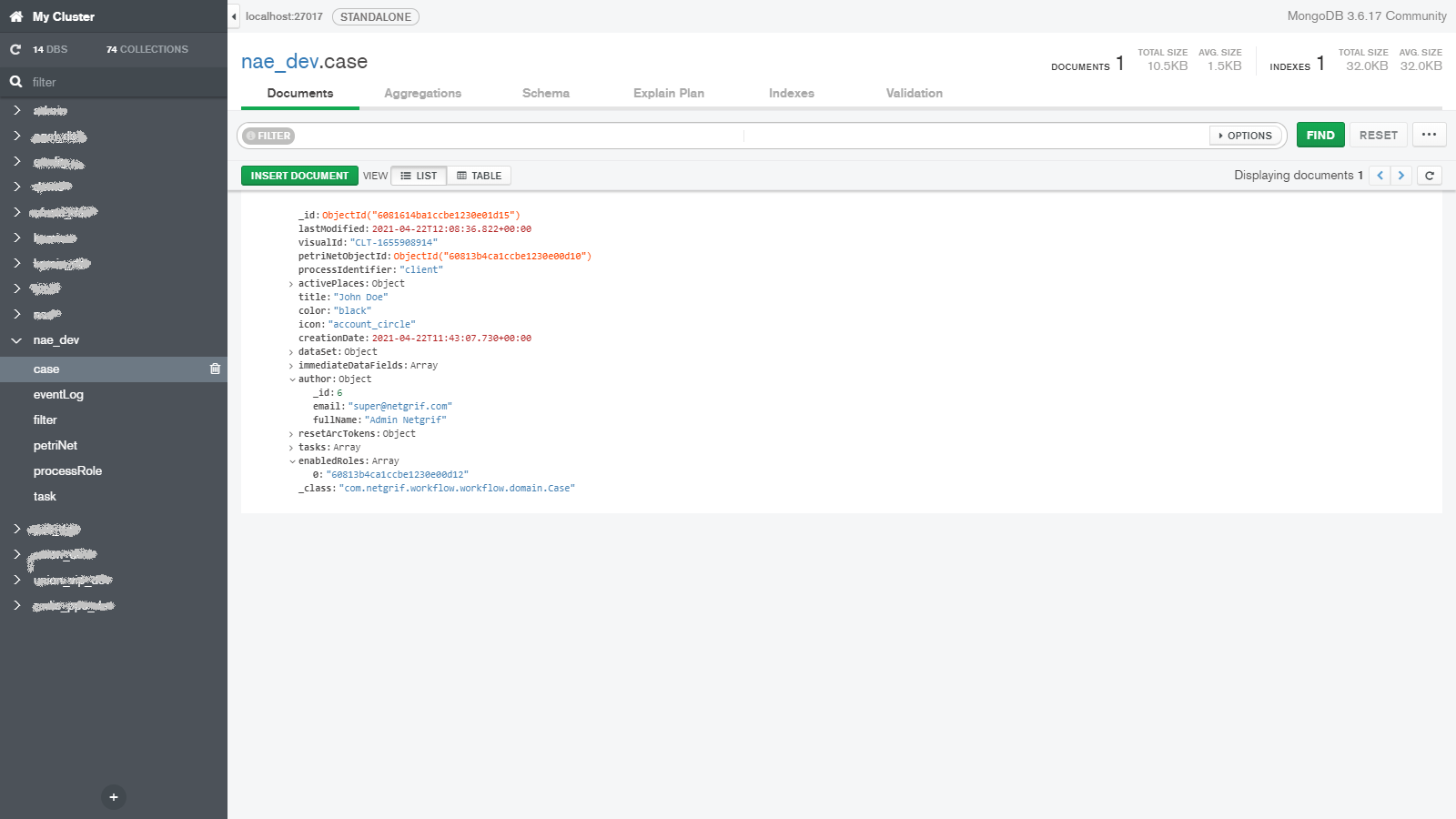

Case creation

To a user, creating a new case might seem trivial, but from the point of view of the stored data, the truth is far from it. The process that serves as the template for the case is loaded and a new case is created from it. If the process declares initial values for some of its data variables, these values must be set in the new case. Since the 5.0 release of the application engine, we can also define actions that are triggered by the case creation event; if our process defines such actions, they must be executed. Our application can define various rules in the Drools rule engine that should be executed when certain conditions are met, so these conditions must be checked and the associated rules executed. The newly created case can then be stored in its MongoDB collection alongside the other cases in our application. Even this is not the end, as we need to be able to index the case for future use: a representation of the new case is created and stored in Elasticsearch. If processes are analogous to tables in relational databases, then cases are analogous to the entries, or rows, of these tables. A process defines the structure of the data, and a case stores the values associated with one instance.
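The sequence of steps described above can be sketched in Python as a single function. This is a simplified model under several assumptions: `create_case`, `Process`, `Case` and the `(condition, consequence)` rule pairs are hypothetical names, the rule pairs are a crude stand-in for Drools rules (not the Drools API), and plain dictionaries stand in for MongoDB and Elasticsearch.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class Case:
    id: str
    process_id: str
    data: Dict[str, Any]


@dataclass
class Process:
    identifier: str
    data: Dict[str, Optional[Any]]  # variable id -> declared initial value (None if none)
    create_actions: List[Callable[[Case], None]] = field(default_factory=list)


# (condition, consequence): a crude stand-in for a rule, not the Drools API.
Rule = Tuple[Callable[[Case], bool], Callable[[Case], None]]

_case_ids = itertools.count(1)


def create_case(process_id: str,
                processes: Dict[str, Process],
                rules: List[Rule],
                case_store: Dict[str, Case],
                search_index: Dict[str, Dict[str, Any]]) -> Case:
    process = processes[process_id]                             # 1. load the template process
    case = Case(f"case-{next(_case_ids)}", process.identifier,  # 2. create the case and
                dict(process.data))                             # 3. set the initial values
    for action in process.create_actions:                       # 4. run case-create actions
        action(case)
    for condition, consequence in rules:                        # 5. check conditions, fire rules
        if condition(case):
            consequence(case)
    case_store[case.id] = case                                  # 6. persist (MongoDB stand-in)
    search_index[case.id] = {"processId": case.process_id,      # 7. index (Elasticsearch stand-in)
                             **case.data}
    return case


def mark_new(case: Case) -> None:
    case.data["status"] = "new"


def flag_empty(case: Case) -> None:
    case.data["flag"] = "empty claim"


processes = {"claim": Process("claim", {"amount": 0, "status": None}, [mark_new])}
rules = [(lambda case: case.data["amount"] == 0, flag_empty)]
stored_cases, index = {}, {}
claim = create_case("claim", processes, rules, stored_cases, index)
```

The order matters: the case is persisted and indexed only after actions and rules have run, so both stores receive the same, fully initialised values.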

Tasks - the data forms

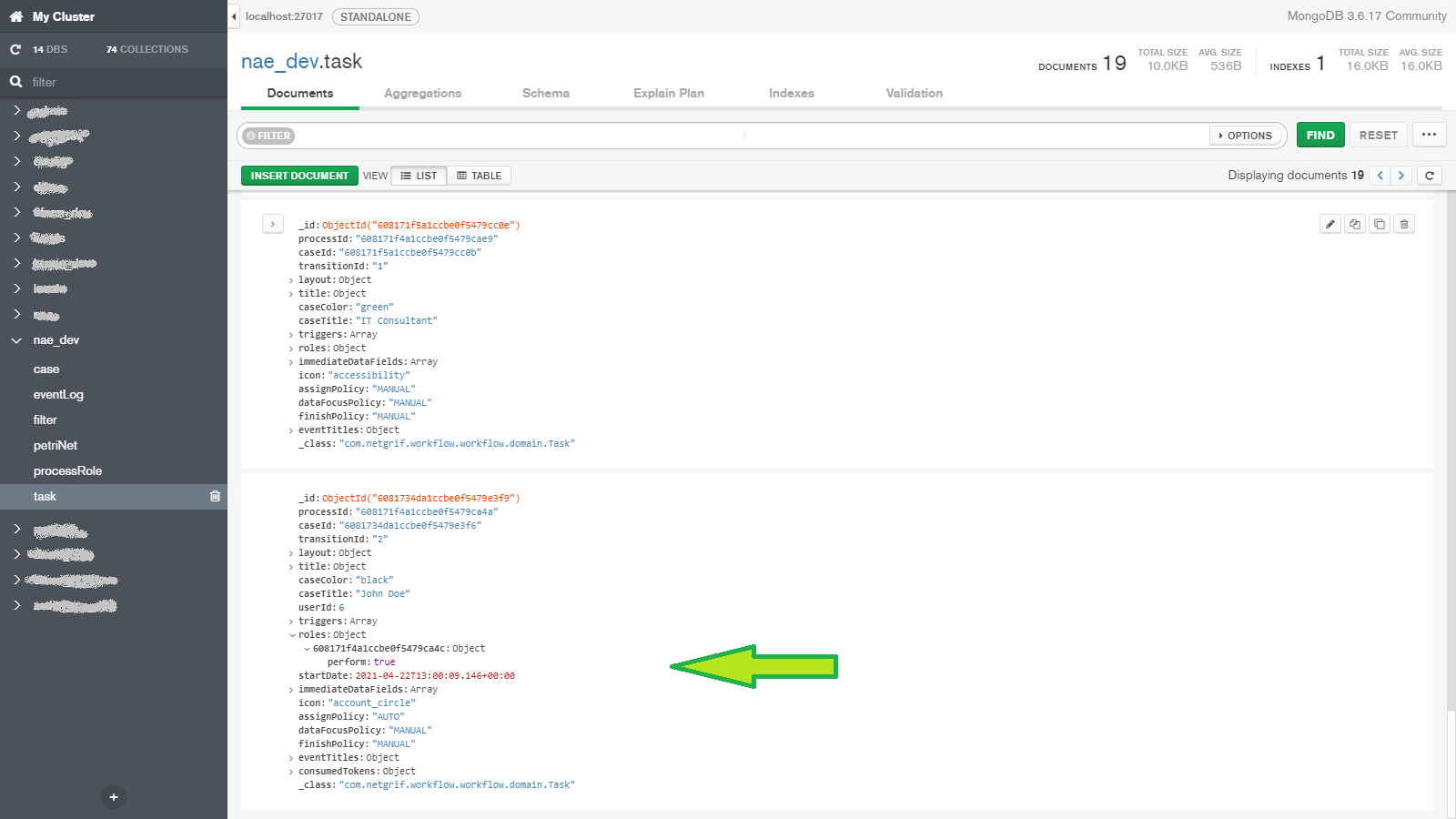

It is nice to have the data stored in a database, but we also need a way to interact with it. That is where tasks come in. Task objects are created whenever the marking of the underlying Petri net changes, and they reflect the transitions enabled in the net. The initial tasks, which correspond to the transitions enabled at the start of the process, are created together with the new case.
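To illustrate how tasks follow the marking, here is a minimal Python sketch of a place/transition net in which every enabled transition has exactly one task object. `PetriNet` and `CaseTasks` are hypothetical names; the net supports only weight-1 arcs (Petriflow offers more arc types), and finishing a task is treated as one atomic firing of its transition.

```python
from typing import Dict, List, Set


class PetriNet:
    """A tiny place/transition net with weight-1 arcs only (a simplification)."""

    def __init__(self, inputs: Dict[str, List[str]], outputs: Dict[str, List[str]],
                 marking: Dict[str, int]) -> None:
        self.inputs = inputs          # transition id -> input places
        self.outputs = outputs        # transition id -> output places
        self.marking = dict(marking)  # place -> number of tokens

    def enabled(self) -> Set[str]:
        """A transition is enabled when each of its input places holds a token."""
        return {t for t, places in self.inputs.items()
                if all(self.marking.get(p, 0) >= 1 for p in places)}

    def fire(self, transition: str) -> None:
        if transition not in self.enabled():
            raise ValueError(f"transition {transition!r} is not enabled")
        for p in self.inputs[transition]:
            self.marking[p] -= 1
        for p in self.outputs[transition]:
            self.marking[p] = self.marking.get(p, 0) + 1


class CaseTasks:
    """Keeps one task object per enabled transition of a case's net."""

    def __init__(self, net: PetriNet) -> None:
        self.net = net
        self.tasks: Dict[str, dict] = {}
        self._sync()  # the initial tasks are created together with the case

    def _sync(self) -> None:
        enabled = self.net.enabled()
        for t in enabled - set(self.tasks):   # newly enabled -> create task objects
            self.tasks[t] = {"transitionId": t}
        for t in set(self.tasks) - enabled:   # no longer enabled -> remove their tasks
            del self.tasks[t]

    def finish(self, transition: str) -> None:
        self.net.fire(transition)  # the marking changes...
        self._sync()               # ...so the tasks are updated


net = PetriNet(
    inputs={"fill_in": ["start"], "approve": ["filled"], "reject": ["filled"]},
    outputs={"fill_in": ["filled"], "approve": ["done"], "reject": ["done"]},
    marking={"start": 1},
)
case = CaseTasks(net)
```

Right after creation only `fill_in` has a task; finishing it moves the token to `filled`, which enables `approve` and `reject`, and finishing either of them leaves the case without any tasks.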

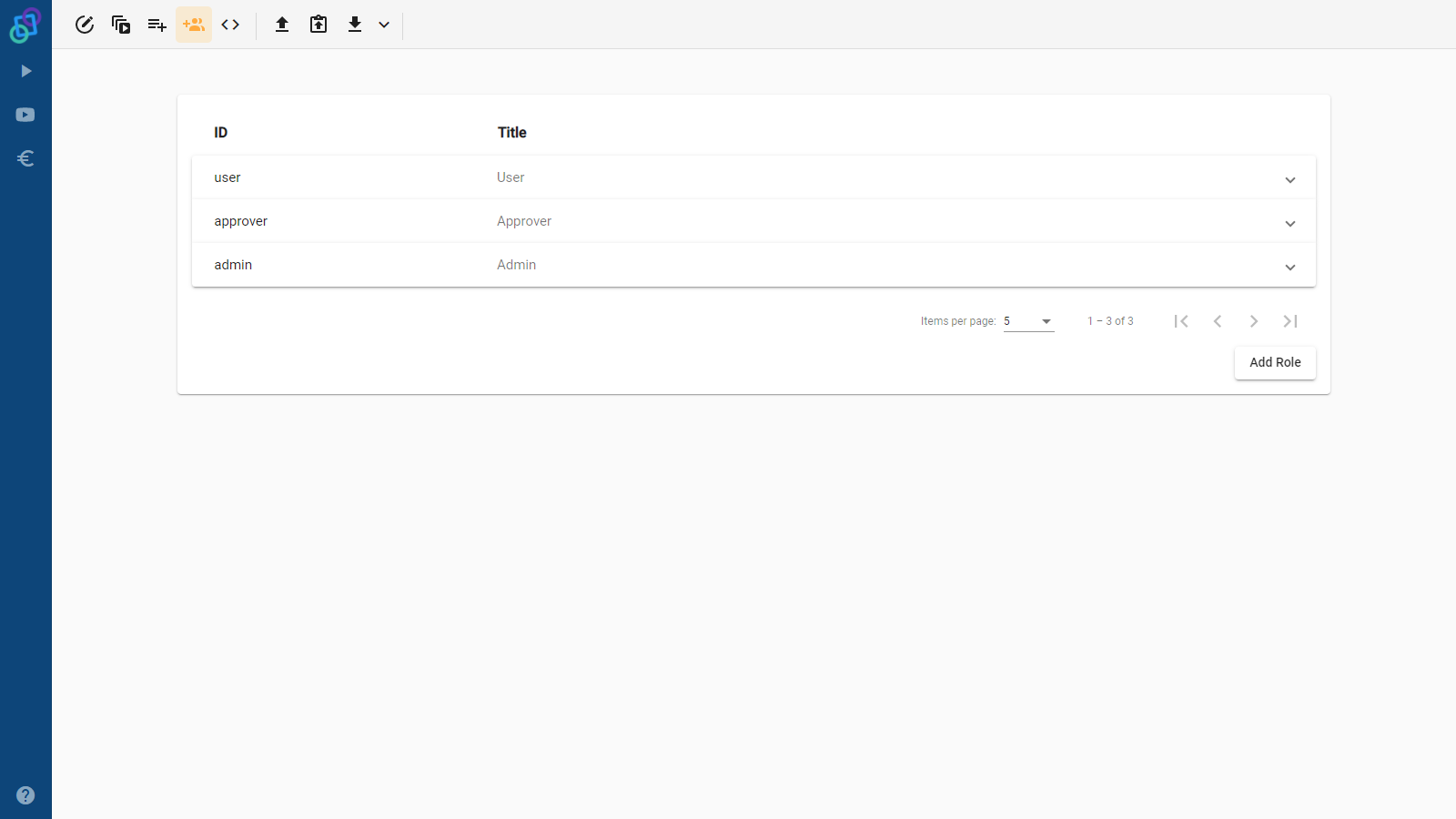

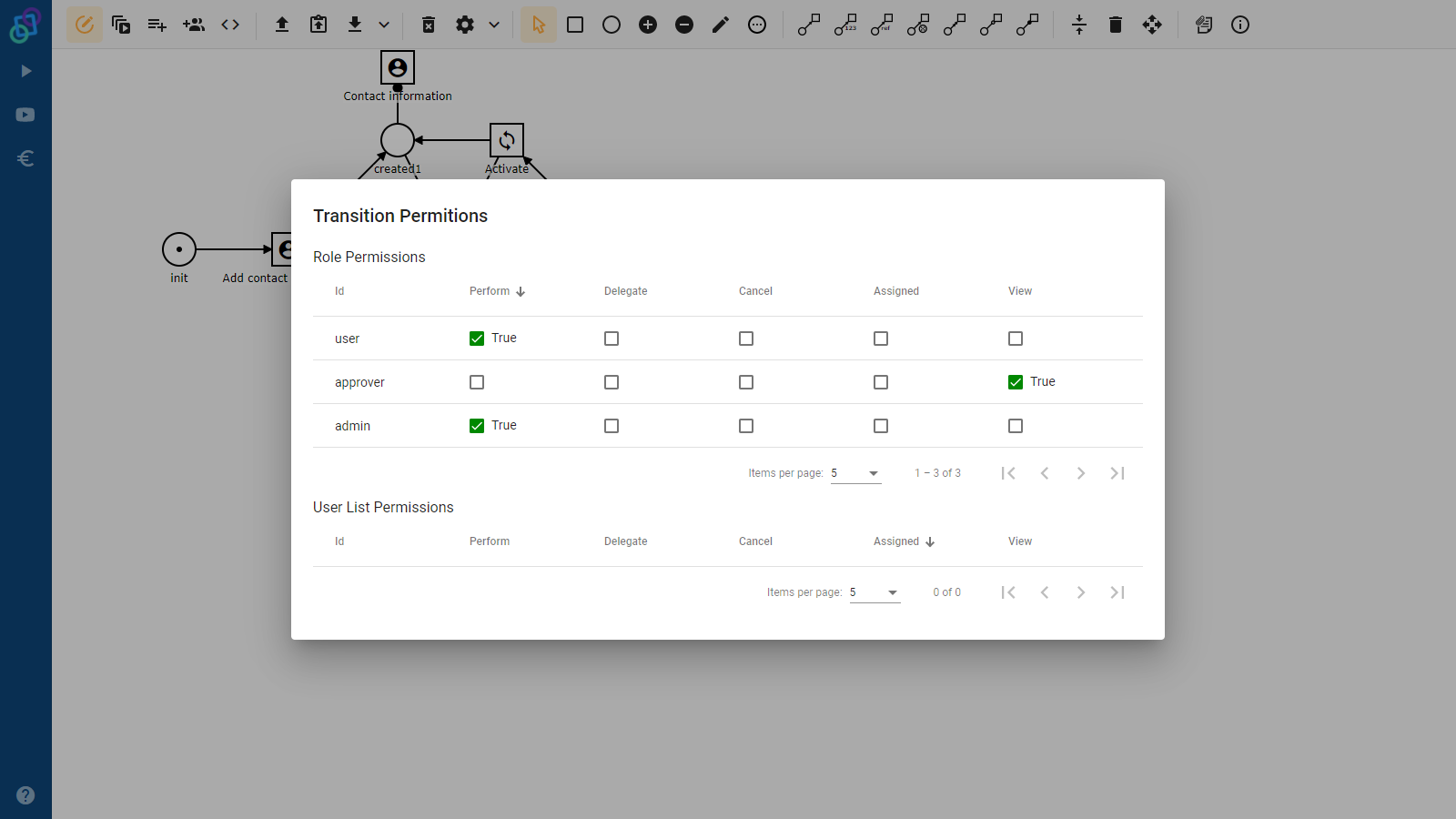

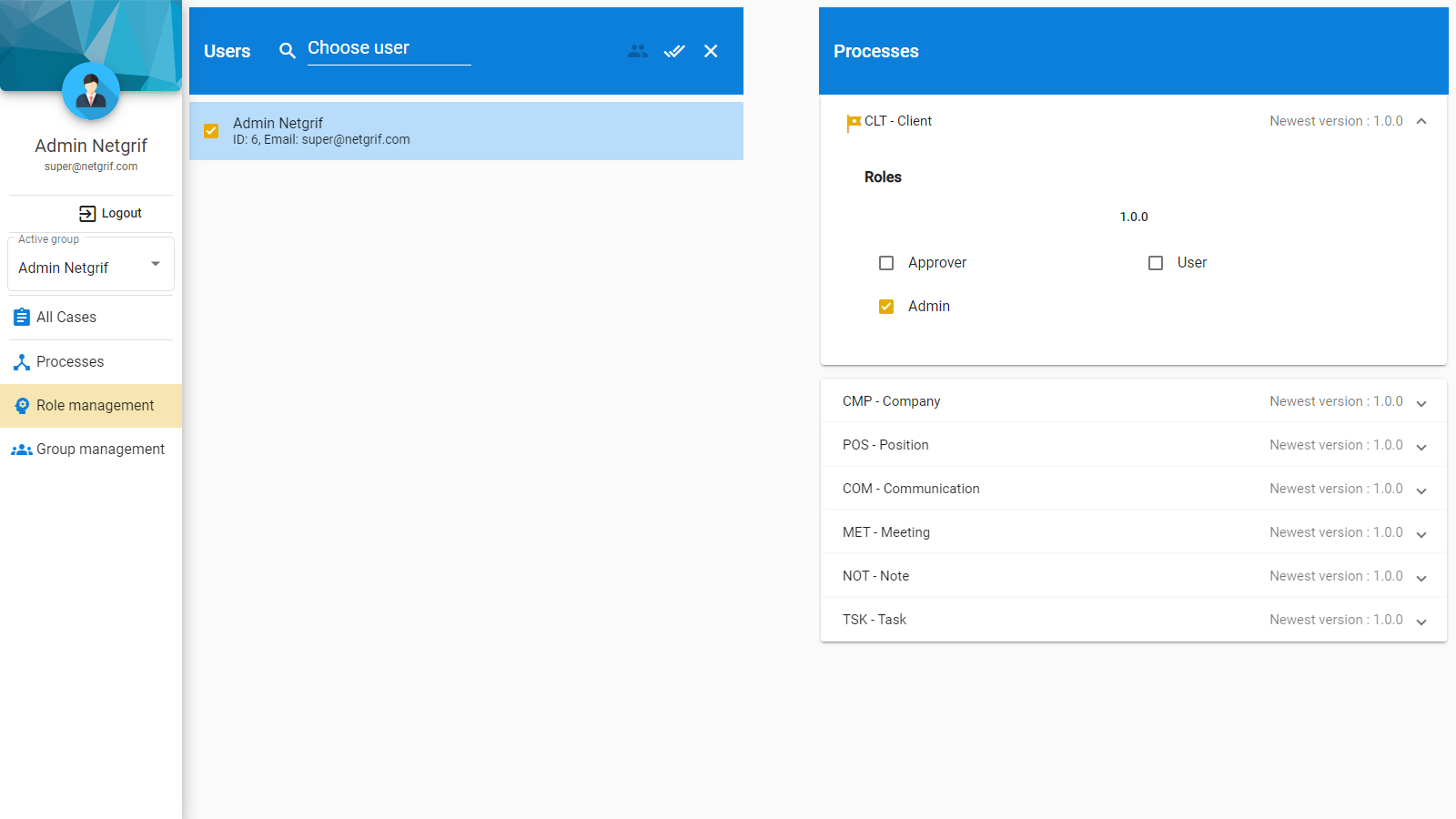

If we wanted to continue our analogy with relational databases, we might say that tasks are similar to the SELECT and UPDATE statements of such databases. We can use process roles and user list data variables to restrict access to individual tasks to a group of our users. Tasks contain references to the data variables of their case, and each of these data references defines a specific behaviour for the referenced variable. A data variable can be made visible only, so that users can read its value, or editable, so that users can change its value and, by doing so, change the value stored in the case. To restrict the accepted inputs, we can use our validation framework to accept only values with certain properties. The validations then prevent users from setting invalid values in the data variables of the cases.
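A minimal Python sketch of these three mechanisms (role-based access, visible versus editable data references, and validations) might look like this. The `Task` class, the `VISIBLE`/`EDITABLE` constants and the lambda validations are hypothetical simplifications; user list variables are omitted and only roles are checked.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Set

VISIBLE, EDITABLE = "visible", "editable"


@dataclass
class Task:
    case_data: Dict[str, Any]   # the data variables of the task's case
    roles: Set[str]             # roles allowed to work with this task
    refs: Dict[str, str]        # data variable id -> behaviour (VISIBLE or EDITABLE)
    validations: Dict[str, Callable[[Any], bool]] = field(default_factory=dict)

    def _check_access(self, user_roles: Set[str]) -> None:
        if not self.roles & user_roles:
            raise PermissionError("user has no role assigned to this task")

    def get_data(self, user_roles: Set[str]) -> Dict[str, Any]:
        """Read only the variables this task references."""
        self._check_access(user_roles)
        return {var: self.case_data[var] for var in self.refs}

    def set_data(self, user_roles: Set[str], var: str, value: Any) -> None:
        """Write through to the case, but only if the field is editable and valid."""
        self._check_access(user_roles)
        if self.refs.get(var) != EDITABLE:
            raise PermissionError(f"{var} is not editable in this task")
        check = self.validations.get(var)
        if check is not None and not check(value):
            raise ValueError(f"invalid value for {var}: {value!r}")
        self.case_data[var] = value


claim_data = {"client_name": "Alice", "amount": 100, "internal_note": "vip"}
review = Task(
    case_data=claim_data,
    roles={"clerk"},
    refs={"client_name": VISIBLE, "amount": EDITABLE},  # internal_note is not referenced
    validations={"amount": lambda v: v > 0},
)
review.set_data({"clerk"}, "amount", 200)
```

Because the task holds a reference to the case's data rather than a copy, a successful `set_data` changes the value stored in the case, while `internal_note` stays invisible to anyone working through this task.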

Task reference - the nested forms

While tasks themselves do not store any of the case data in the database, they are a key element of the process-driven platform because of the task reference (task ref) data variable. This data variable type changed the way we design process-driven applications and greatly expanded our options. Tasks contain data references to the data variables of their case and display them, so we can think of tasks as the forms that users interact with in our application. A task ref can then be used to embed forms into other forms, transparently for the user. The embedded forms still reference the variables of their own case, so the user can manipulate data of other cases without even noticing. A task can only reference data variables of the process it belongs to. However, if it references a task ref variable, the value of that variable, and therefore the embedded task, is not limited to tasks of the same case, or even to cases of the same process. This way we can create complex applications that share the same data while keeping it stored in a single location.
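The following Python sketch shows why embedding works without copying data: when a form is flattened, every field remains paired with the case that owns it. `Case`, `Task` and `form_fields` are hypothetical names, and we assume for illustration that the value of a task ref variable is a list of task identifiers, which may belong to other cases.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Case:
    id: str
    data: Dict[str, Any]


@dataclass
class Task:
    id: str
    case: Case
    refs: List[str]                                     # variables of its own case it shows
    task_refs: List[str] = field(default_factory=list)  # which of those refs are task refs


def form_fields(task: Task, tasks_by_id: Dict[str, Task],
                _path: Tuple[str, ...] = ()) -> List[Tuple[Case, str]]:
    """Flatten a form into (owning case, variable) pairs, descending into embedded tasks."""
    if task.id in _path:
        raise ValueError(f"cyclic task reference through {task.id!r}")
    result: List[Tuple[Case, str]] = []
    for var in task.refs:
        if var in task.task_refs:
            # Assumption: a task ref's value is a list of task ids, possibly of other cases.
            for embedded_id in task.case.data[var]:
                result += form_fields(tasks_by_id[embedded_id], tasks_by_id, _path + (task.id,))
        else:
            result.append((task.case, var))
    return result


customer = Case("customer-1", {"email": "alice@example.com"})
order = Case("order-1", {"item": "book", "customer_form": ["contact"]})
tasks_by_id = {
    "contact": Task("contact", customer, ["email"]),
    "checkout": Task("checkout", order, ["item", "customer_form"], task_refs=["customer_form"]),
}

checkout_form = form_fields(tasks_by_id["checkout"], tasks_by_id)
owner, var = checkout_form[1]
owner.data[var] = "alice@new.example.com"  # editing the embedded field updates the customer case
```

The user sees one checkout form, yet the e-mail field is still stored only in the customer case; the order case holds nothing but the reference to the embedded task.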

When, who and how

Since our platform creates process-driven applications, the tasks available in individual cases change over time based on the state of the underlying Petri net. This lets us decide when, by whom and how the data stored in the case, and by extension in the database, can be read or modified.

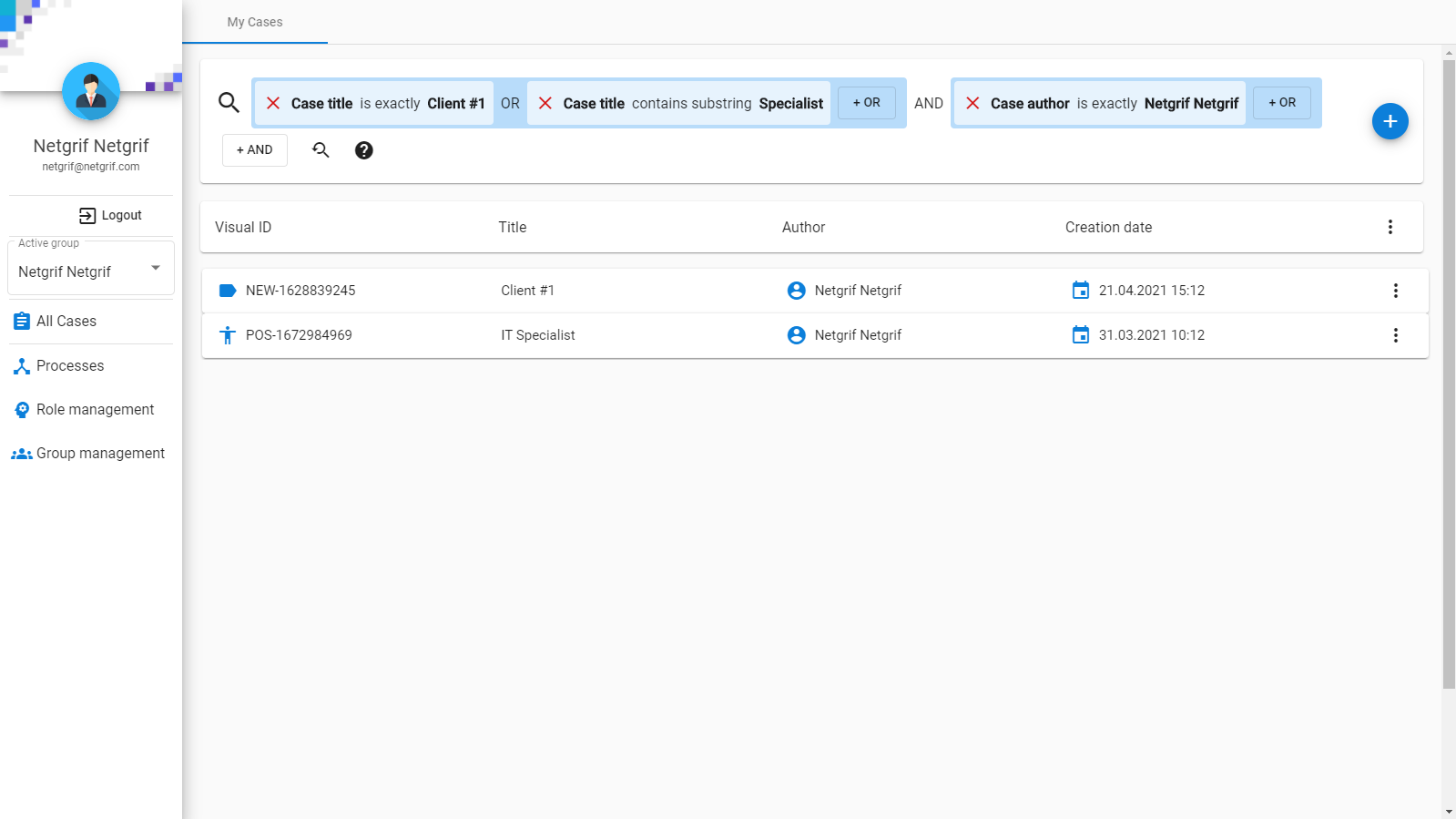

Searching

Once we have all this data and understand how the processes create and shape it, only one question remains: how do we search in it? As mentioned earlier, we use Elasticsearch to store information about the data in the application and to search for the data we need at any given moment. Cases, and by extension those of their data variables that we marked for indexing, are indexed automatically, and the index is updated whenever something about the case changes (most commonly the value of one of its data variables). Users can construct elaborate queries in our intuitive search component to find the cases or tasks they need. Developers can use the QueryDSL API to find the data they need within Petriflow actions, and if their requirements are too exotic, nothing prevents them from accessing the databases and the data stored in them more directly.
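The indexing behaviour described above can be modelled with a small in-memory index. `CaseIndex`, its `index` and `search` methods and the exact-match query are hypothetical and deliberately simple; this is not the Elasticsearch API. The sketch shows two properties: only variables marked for indexing end up in the index, and re-indexing after a change makes the new value searchable.

```python
from typing import Any, Dict, List, Set


class CaseIndex:
    """In-memory stand-in for the case index (not the Elasticsearch API)."""

    def __init__(self, indexed_vars: Dict[str, Set[str]]) -> None:
        self.indexed_vars = indexed_vars  # process id -> data variables marked for indexing
        self.docs: Dict[str, Dict[str, Any]] = {}

    def index(self, case_id: str, process_id: str, data: Dict[str, Any]) -> None:
        """(Re)build the document of a case; called on creation and after every change."""
        allowed = self.indexed_vars.get(process_id, set())
        self.docs[case_id] = {"processId": process_id,
                              **{k: v for k, v in data.items() if k in allowed}}

    def search(self, **criteria: Any) -> List[str]:
        """Ids of cases whose indexed fields equal all the given values."""
        return sorted(case_id for case_id, doc in self.docs.items()
                      if all(doc.get(k) == v for k, v in criteria.items()))


case_index = CaseIndex({"claim": {"status"}})
case_index.index("c1", "claim", {"status": "open", "internal_note": "vip"})
case_index.index("c2", "claim", {"status": "closed", "internal_note": "late"})
open_before = case_index.search(status="open")
case_index.index("c2", "claim", {"status": "open", "internal_note": "late"})  # data changed
open_after = case_index.search(status="open")
```

Since `internal_note` is not marked for indexing, it never reaches the index and a search on it finds nothing, while the update of `c2` becomes visible to searches as soon as the case is re-indexed.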